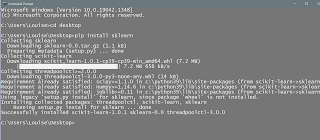

Back to the AI lecture. Installed a new module:

* * *

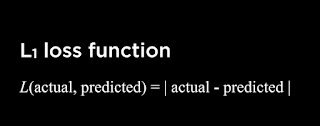

Left off with the issue of loss functions to evaluate our model. Loss1 shows

a simple count of the cases that the model doesn't pick up. L2 penalizes a large

discrepancy more heavily than a small one:

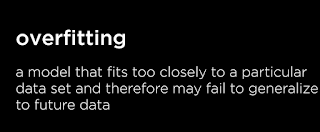
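
To make the difference between the two losses concrete, here is a minimal sketch in plain Python. The function names `count_loss` and `l2_loss` and the numbers are my own, made up for illustration; they are not taken from the lecture.

```python
def count_loss(actual, predicted):
    """Count the cases where the prediction misses the actual value."""
    return sum(1 for a, p in zip(actual, predicted) if a != p)


def l2_loss(actual, predicted):
    """Sum of squared differences: a big miss costs far more than a small one."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted))


# Made-up values: one exact hit, one miss by 1, one miss by 3.
actual = [2, 4, 6]
predicted = [2, 5, 9]

print(count_loss(actual, predicted))  # both misses count the same
print(l2_loss(actual, predicted))     # the miss by 3 contributes 9, the miss by 1 only 1
```

The count treats both misses alike, while the squared loss is dominated by the single large one.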

Overfitting the data can sometimes lead to a model that describes the data perfectly,

but is a weak predictor for that very reason.

The way around this problem is to add a complexity term to the cost (regularization), whose weight we

choose so as to weed out fits that are too precise.
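
A rough sketch of that idea, assuming the cost takes the usual form loss plus a weight times complexity. The two candidate models, their loss and complexity numbers, and the names `regularized_cost`, `best_model` and `lam` are all hypothetical.

```python
def regularized_cost(loss, complexity, lam):
    """Cost = loss + lam * complexity, where lam is the weight we choose."""
    return loss + lam * complexity


# Hypothetical candidates: (name, loss on the training data, complexity measure).
candidates = [
    ("simple", 3.0, 2),   # misses a few points but is a simple model
    ("wiggly", 0.0, 10),  # fits every point but is very complex
]


def best_model(candidates, lam):
    """Return the name of the candidate with the lowest regularized cost."""
    return min(candidates, key=lambda c: regularized_cost(c[1], c[2], lam))[0]


print(best_model(candidates, lam=0.0))  # no penalty: the perfect fit wins
print(best_model(candidates, lam=0.5))  # with a penalty: the simpler model wins
```

With the weight at zero the overfitted model always wins; raising it shifts the choice toward the simpler one.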

One can easily enough divide the data between a training set and a testing set.

Best to do this in multiple configurations (cross-validation).
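
Splitting in multiple configurations can be sketched by hand as a k-fold split: cut the data into k parts and let each part take a turn as the testing set. This is only an illustration in plain Python; the helper name `k_fold_splits` and the sizes are my own assumptions.

```python
import random


def k_fold_splits(n_samples, k, seed=0):
    """Yield (train_indices, test_indices) pairs, one per fold."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once, reproducibly
    folds = [indices[i::k] for i in range(k)]  # k roughly equal parts
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


# 10 samples, 5 configurations: each sample is tested exactly once.
splits = list(k_fold_splits(n_samples=10, k=5))
for train, test in splits:
    print("train:", sorted(train), "test:", sorted(test))
```

One would fit the model on each training part, score it on the matching testing part, and average the scores.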

Python has libraries that will handle all this for us. This is sklearn I have installed.
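
As a hedged sketch of what that looks like with sklearn: the data here is synthetic, generated with `make_classification`, and is not the dataset from the lecture. The choice of models, the 40% test size and the 5 folds are my own assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import Perceptron
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Synthetic two-class data standing in for a real labelled dataset.
X, y = make_classification(n_samples=200, n_features=4, random_state=0)

# One configuration: hold out 40% of the data for testing.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.4, random_state=0
)

models = {
    "Perceptron": Perceptron(random_state=0),
    "KNeighborsClassifier": KNeighborsClassifier(n_neighbors=3),
}

results = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    holdout_accuracy = model.score(X_test, y_test)
    # Multiple configurations: 5-fold cross-validation on the full data.
    cv_accuracy = cross_val_score(model, X, y, cv=5).mean()
    results[name] = (holdout_accuracy, cv_accuracy)
    print(f"{name}: holdout={holdout_accuracy:.2f}, 5-fold mean={cv_accuracy:.2f}")
```

The library does the splitting, fitting and scoring, so comparing models comes down to swapping entries in the `models` dictionary.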

Working with data on serial numbers from US bank notes, and knowing which are

genuine and which are counterfeit, one can see how the different models perform:
