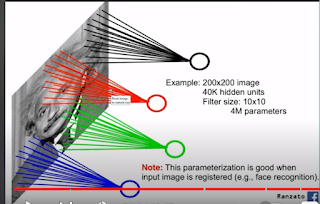

Getting a sense of why convolutional layers are used: because they are locally connected, rather than fully connected. Each unit looks only at a small patch of its input instead of at every value. This saves a great deal of computing time and resources.
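To put rough numbers on the saving, here is a minimal sketch in plain Python that counts the weights in a fully connected layer versus a convolutional one. The image size, the number of outputs and the 3x3 window are made-up values for illustration, not figures from the course. The convolutional count also relies on the same small filter being reused at every position of the image.

```python
# Hypothetical sizes, chosen only for illustration.
height, width, channels = 32, 32, 3   # a small RGB image
num_outputs = 16                      # output units (dense) or filters (conv)
kernel_size = 3                       # 3x3 convolution window


def fully_connected_params(n_inputs, n_outputs):
    """Every output connects to every input, plus one bias per output."""
    return n_inputs * n_outputs + n_outputs


def conv_params(in_channels, out_channels, k):
    """Each filter covers a k x k patch across all input channels,
    plus one bias per filter. The filter is reused at every position."""
    return in_channels * k * k * out_channels + out_channels


fc = fully_connected_params(height * width * channels, num_outputs)
conv = conv_params(channels, num_outputs, kernel_size)
print("fully connected:", fc)   # 49168
print("convolutional:  ", conv)  # 448
```

In PyTorch the two layer types being compared would be `nn.Linear` and `nn.Conv2d`.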

Once this work is done, results are 'pooled' (see Pytorch 13).

Need to appreciate, as well, that a color image has three dimensions: height, width and color channel. There is one channel for each of the three RGB colors, with each value on a 0 to 255 scale.
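A tiny example helps make the layout concrete. This sketch uses plain nested lists and an invented 2x2 image, so every pixel value here is an assumption for illustration. It stores the image as height x width x color and then splits it into one grid per color.

```python
# A made-up 2x2 RGB image: image[row][col] = [R, G, B], each value 0..255.
image = [
    [[255, 0, 0], [0, 255, 0]],
    [[0, 0, 255], [255, 255, 255]],
]

height = len(image)
width = len(image[0])
channels = len(image[0][0])
print(height, width, channels)  # 2 2 3

# Split into one height x width grid per color channel.
red = [[pixel[0] for pixel in row] for row in image]
green = [[pixel[1] for pixel in row] for row in image]
blue = [[pixel[2] for pixel in row] for row in image]
print(red)  # [[255, 0], [0, 255]]
```

PyTorch image tensors usually put the channel first (channels, height, width), which is the same idea as the three separate grids above.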

Max pooling takes the largest value in each window, average pooling takes an average. This is called downsampling.
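Both kinds of pooling can be sketched in a few lines of plain Python. The 4x4 feature map and the 2x2 non-overlapping window below are assumptions for illustration, and `pool2d` and `mean` are hypothetical helper names, not library functions.

```python
def pool2d(grid, size, reduce_fn):
    """Slide a non-overlapping size x size window over grid and
    reduce each window to a single number with reduce_fn."""
    pooled = []
    for r in range(0, len(grid) - size + 1, size):
        row = []
        for c in range(0, len(grid[0]) - size + 1, size):
            window = [grid[r + i][c + j]
                      for i in range(size) for j in range(size)]
            row.append(reduce_fn(window))
        pooled.append(row)
    return pooled


def mean(values):
    return sum(values) / len(values)


# Made-up 4x4 feature map.
feature_map = [
    [1, 3, 2, 0],
    [5, 7, 4, 6],
    [9, 8, 1, 1],
    [2, 4, 3, 5],
]

max_pooled = pool2d(feature_map, 2, max)
avg_pooled = pool2d(feature_map, 2, mean)
print(max_pooled)  # [[7, 6], [9, 5]]
print(avg_pooled)  # [[4.0, 3.0], [5.75, 2.5]]
```

Either way the 4x4 grid comes out as 2x2, which is the downsampling. The PyTorch layers for this are `nn.MaxPool2d` and `nn.AvgPool2d`.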

There can be several convolutional layers, as well as pooling ones. All in the interest of reducing the scale of computations needed.

Once out of the last pooling layer, the information is flattened to a one-dimensional vector, which can then feed into fully connected operations.
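The flattening step is simple enough to sketch in plain Python. The pooled values, weights and biases below are invented for illustration, and `flatten` and `fully_connected` are hypothetical helper names rather than PyTorch functions.

```python
def flatten(feature_maps):
    """Turn channels x height x width nested lists into one flat list."""
    return [value for fmap in feature_maps for row in fmap for value in row]


def fully_connected(vector, weights, biases):
    """Each output is a weighted sum of every input value, plus a bias."""
    return [sum(w * x for w, x in zip(ws, vector)) + b
            for ws, b in zip(weights, biases)]


# Made-up output of the last pooling layer: 2 channels, each 2x2.
pooled = [
    [[7, 6], [9, 5]],
    [[1, 0], [2, 3]],
]

vector = flatten(pooled)
print(vector)  # [7, 6, 9, 5, 1, 0, 2, 3]

# Hypothetical weights: 2 output units, 8 inputs each.
weights = [[1] * 8, [0.5] * 8]
biases = [0, 1]
outputs = fully_connected(vector, weights, biases)
print(outputs)  # [33, 17.5]
```

In PyTorch this would typically be `nn.Flatten` followed by `nn.Linear`.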
